InvokeAI - The Stable Diffusion Toolkit

A downloadable tool for Windows

Requires an NVIDIA graphics card (except GTX 1650).

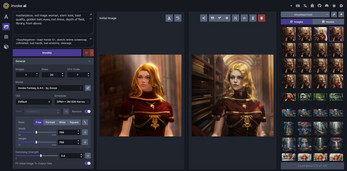

Stable Diffusion - Write anything, the AI paints it!

- Unlimited. No censorship, unlimited pictures, use them for anything you want, 6s per image on an RTX 3060 (for SD1.5; SDXL will take longer).
- No internet connection needed. It runs entirely on your PC.
- Free!

Click here to learn more about stable diffusion.

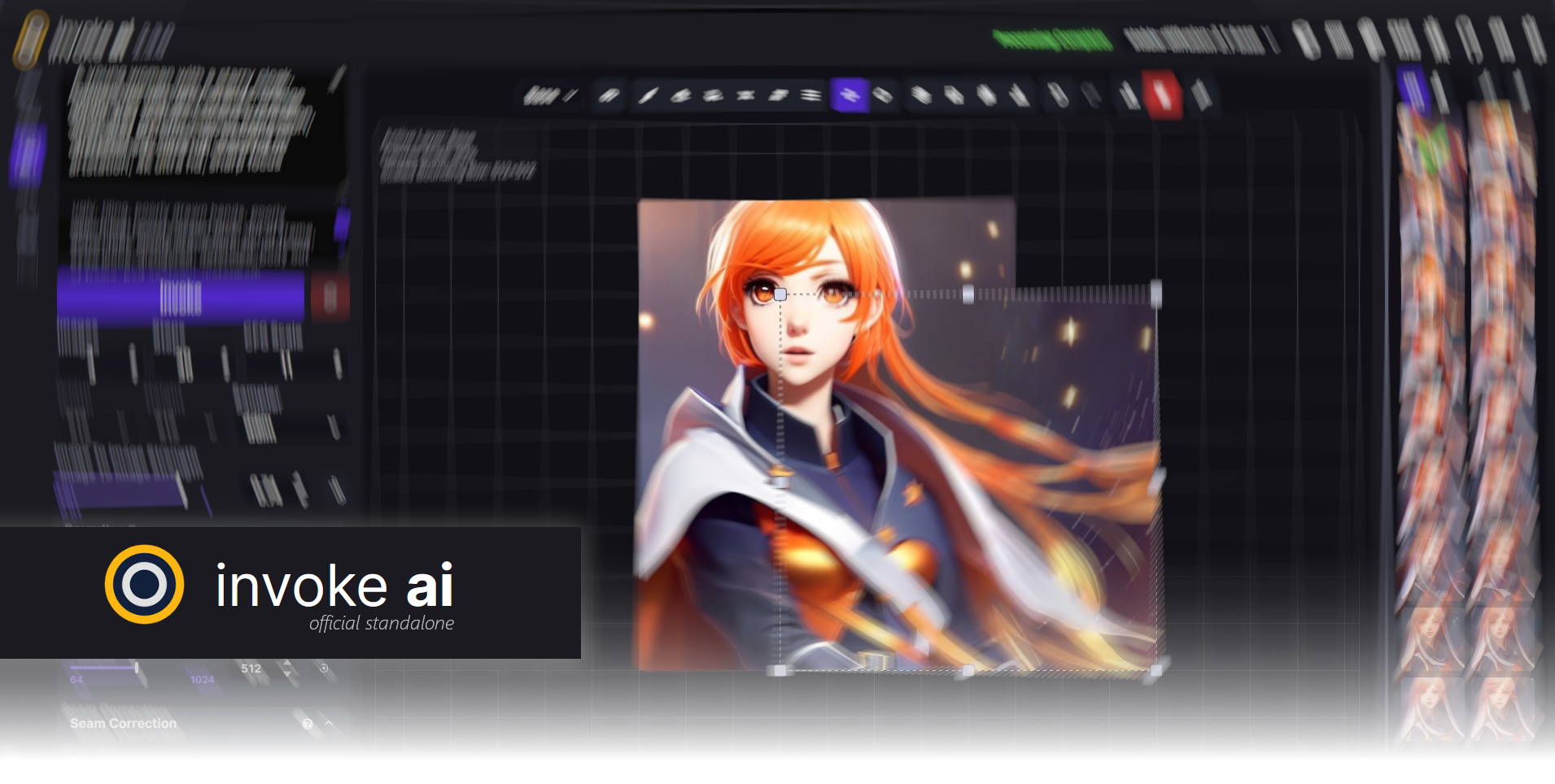

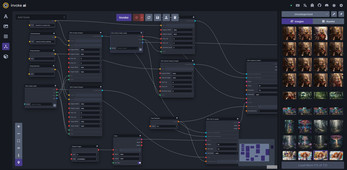

InvokeAI - Powerful & Easy UI

- Modern & easy to use. UI with the workflow in mind.
- So. Many. Features. SDXL, ControlNet, Nodes, in/outpainting, img2img, model merging, upscaling, LoRAs, ... There's barely anything InvokeAI cannot do.
- Try more art styles! Easily get new finetuned models with the integrated model installer!
- Let your friends join! You can easily give them access to generate images on your PC. Or use...
- Tablet mode! Run InvokeAI on your PC, and connect your tablet/smartphone to it. Generate images from your bed!
- Free & open source.

Click here to learn more about InvokeAI.
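For tablet mode, the tablet needs the address of the PC on the local network. Here is a minimal sketch for finding it. It assumes that the InvokeAI web UI listens on port 9090 (the upstream default as far as I know; the standalone may differ) and that it is set up to accept connections from other devices. `lan_ip` and `tablet_url` are illustrative names, not part of InvokeAI.

```python
import socket


def lan_ip() -> str:
    """Best-effort guess of this PC's address on the local network."""
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # connect() on a UDP socket sends no packets; it only selects
        # the network interface that would be used for outgoing traffic.
        s.connect(("192.0.2.1", 80))
        return s.getsockname()[0]
    except OSError:
        # No usable network route: fall back to the loopback address.
        return "127.0.0.1"
    finally:
        s.close()


def tablet_url(ip: str, port: int = 9090) -> str:
    """Build the address to type into the tablet's browser (port is an assumption)."""
    return f"http://{ip}:{port}"


if __name__ == "__main__":
    print(tablet_url(lan_ip()))
```

If the printed address shows `127.0.0.1`, the PC has no network route and the tablet will not be able to reach it.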

About this standalone

- One-click-installer! Just download, unzip, double-click. No command line, no Python installation needed.
- With a basic model. Comes pre-packaged with a finetuned model! Download more (e.g. from civitai.com) and easily add them in the UI. Or give the integrated model downloader a try!
- Open Source. You can find the source code in the download.
- Why this standalone? This standalone is not affiliated with InvokeAI. I created it because the normal InvokeAI installation can be tricky for new users. If you encounter problems with the standalone, join the official InvokeAI Discord and ping @Sunija#6598.

♥ Share it with your friends! ♥

Need help with the installation?

Join the InvokeAI Discord and ping @Sunija#6598.

| Field | Details |
| --- | --- |
| Status | Released |
| Category | Tool |
| Platforms | Windows |
| Rating | Rated 4.6 out of 5 stars (95 total ratings) |
| Author | Sunija |
| Tags | ai, Generator, Graphical User Interface (GUI), image-generation, inpainting, invokeai, model, outpainting, stable-diffusion |
| Code license | MIT License |
| Average session | About a half-hour |
| Languages | German, English, Spanish (Latin America), French, Italian, Japanese, Dutch, Polish, Portuguese (Brazil), Russian, Ukrainian, Chinese (Simplified) |
| Multiplayer | Server-based networked multiplayer |
| Links | Blog |

Download

Install instructions

To unzip the file, you'll need 7-Zip (free) or WinRAR.

Development log

- How to Update to 3.0.2 (SDXL Loras!) (Aug 11, 2023)
- SDXL with UI! (Aug 01, 2023)
- InvokeAI 3.0 - ControlNet! (Jul 23, 2023)

Comments


This is FANTASTIC!!! You're the BEST! Thanks for making it. Hoping to see version 5 in future too.

Many people have switched to the Krita plugin.

https://github.com/Acly/krita-ai-diffusion

Any ideas how to fix this?

Help! My InvokeAI shows me "No UI found". I searched for a method, but I can't find invoke.bat/invoke.sh. Please teach me what to do. I updated to the latest version.

For some reason the update button does not work, I am just stuck at 3.2.0

When I click it, it launches cmd for a few seconds before it turns off...

...once I can implement an easy button to download the default models. :X

With my current internet I'd have trouble uploading the 30+gb package again.

Is their automated installer not working for you?

It starts, opens the browser and writes: {"detail":"Not Found"}

Do you know what to do about this? I have the same problem.

3.6 wrong

Is there a torrent file available?

For those having problems downloading the file, you were prolly using your browser, I downloaded the Itch.io app for Windows and downloaded the file from there, all good.

Hi, when I try to run the application, the command prompt window returns an error saying "Error migrating database from 1 to 2: Not a valid file or directory" and then lists the path of the Invoke Fantasy & Art folder, except on an E:\ drive instead of my D:\ drive where its extracted to.

Hey, when I click launch, it crashes, but I did the system test and everything is good! Need HELP please!!!

Can you send a screenshot to Sunija#6598 on discord?

will there be a 3.5 version?

You can update it within the starter. :)

Thx mate ;). I tried 3.2 and got a "SERVER ERROR" message as soon as I invoke some prompts with the starting model, and I can't get my models to load. Updating to 3.5.1 didn't help, so I am stuck with my good old 3.0.

Updating from 3.0 to 3.5.1 didn't work either.

Any news on ROCm / AMD support?

So I went to the newer version and I just can't get it to work. It won't load my models. I add models, then it says they don't exist, and when I open up my model manager they are gone. Is there any way I can get an older version?

2.3.5 worked well for me, and I just can't get this to work.

To be clear the error i keep getting is Model Not Found Exception, and then my model is gone, like it was removed from Invoke, but it is still in my file location on my computer

A matching Triton is not available, some optimizations will not be enabled.

Error caught was: No module named 'triton'

i CANNOT get this to work, it's giving me SERVER ERROR i'm not a coder, i'm not a programer, EVERYWHERE I GO it's like "open console this, input this code somewhere" I DON'T FUCKING KNOW WHAT ALL OF THAT MEANS.

2.3 version worked just fine, i downloaded it and opened and it WORKED... can you please help me with this bullshit? or help me find the standalone for 2.3.5?

May I ask about something.

In older versions (mine was 2.3.5, I think), there used to be options for upscaling, fixing faces, etc. Are these options no longer available in the new version?

After I updated the app, these options disappeared, and the interface as a whole contains fewer options and has some flaws (previously, in the gallery, I could move from one image to the next using the arrow keys).

Thanks!

Those options were removed from Invoke proper, so yes, they're no longer in the standalone as a result.

That's a bummer. The tool was literally the easiest to use.

With very few options, you could generate fully usable images. Now, you have to go through multiple tools to fix things :(

Thank you for the reply bud!

Here's hoping you make a 3.1 package :)

3.1 is not coming

BUT

well, how about 3.2 then? :P

Hey whenever I start Invoke im getting this message from my antivirus:

Stopping connection to tcp://someIpadress, because It was infected with Botnet:Blacklist

\invokeai\invokeai3_standalone\env\python.exe.

The program starts normally though and works as intended.

First, thanks for the awesome installer. Everything was great, installed some more models, but it says it can't use them. Tried to uninstall and re-install them with no success. Any ideas would be greatly appreciated.

When will standalone version 3.1 be released?

Error caught was: No module named 'triton'

If you're on Windows it's unnecessary.

Is there any chance you can make the 2.3.5 version available again?

Always loading...

3900x

2070super

64G

SSD 4T

Windows 11

Is it possible to allow for older version downloads, I would like to use the 2.3.5 as I keep running into issues with merging my diffuser models (exception in ASGI application) until 3.0 stabilises.

I sadly don't have it uploaded anymore, because the space on my server is limited. :(

If you send me some google drive or dropbox link (via DM), then I can upload it there. :) Should have around 10 GB of free space.

when we install from itch, is the install file saved after install, in case an update corrects it and you need to reinstall?

I think I'm kinda blind, or does the new version no longer have a high res fix?

Yes, no high res fix as well as no face restore. And the SDXL models take 3x as long to render. I'm staying with 2.3.5 until 3.0.x is in good condition.

update.bat (yes, the fix version) ruined my install. I'll just wait for 3.1 instead and download the whole package again then...

While running the update.bat file, an error occurs:

Loading...

Traceback (most recent call last):

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\runpy.py", line 196, in _run_module_as_main

return _run_code(code, main_globals, None,

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\runpy.py", line 86, in _run_code

exec(code, run_globals)

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\Scripts\invokeai-web.exe\__main__.py", line 4, in <module>

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\app\api_app.py", line 22, in <module>

from ..backend.util.logging import InvokeAILogger

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\backend\__init__.py", line 4, in <module>

from .generator import InvokeAIGeneratorBasicParams, InvokeAIGenerator, InvokeAIGeneratorOutput, Img2Img, Inpaint

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\backend\generator\__init__.py", line 4, in <module>

from .base import (

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\backend\generator\base.py", line 9, in <module>

import diffusers

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\diffusers\__init__.py", line 3, in <module>

from .configuration_utils import ConfigMixin

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\diffusers\configuration_utils.py", line 34, in <module>

from .utils import (

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\diffusers\utils\__init__.py", line 21, in <module>

from .accelerate_utils import apply_forward_hook

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\diffusers\utils\accelerate_utils.py", line 24, in <module>

import accelerate

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\accelerate\__init__.py", line 3, in <module>

from .accelerator import Accelerator

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\accelerate\accelerator.py", line 35, in <module>

from .checkpointing import load_accelerator_state, load_custom_state, save_accelerator_state, save_custom_state

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\accelerate\checkpointing.py", line 24, in <module>

from .utils import (

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\accelerate\utils\__init__.py", line 133, in <module>

from .launch import (

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\accelerate\utils\launch.py", line 23, in <module>

from ..commands.config.config_args import SageMakerConfig

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\accelerate\commands\config\__init__.py", line 19, in <module>

from .config import config_command_parser

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\accelerate\commands\config\config.py", line 25, in <module>

from .sagemaker import get_sagemaker_input

File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\site-packages\accelerate\commands\config\sagemaker.py", line 35, in <module>

import boto3 # noqa: F401

File "C:\Users\jaysh\AppData\Roaming\Python\Python310\site-packages\boto3\__init__.py", line 17, in <module>

from boto3.session import Session

File "C:\Users\jaysh\AppData\Roaming\Python\Python310\site-packages\boto3\session.py", line 17, in <module>

import botocore.session

File "C:\Users\jaysh\AppData\Roaming\Python\Python310\site-packages\botocore\session.py", line 26, in <module>

import botocore.client

File "C:\Users\jaysh\AppData\Roaming\Python\Python310\site-packages\botocore\client.py", line 15, in <module>

from botocore import waiter, xform_name

File "C:\Users\jaysh\AppData\Roaming\Python\Python310\site-packages\botocore\waiter.py", line 16, in <module>

import jmespath

ModuleNotFoundError: No module named 'jmespath'

I have no idea what this is? Please help..
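One thing the traceback above does show: the last frames (boto3, botocore) come from `C:\Users\jaysh\AppData\Roaming\Python\Python310\site-packages`, which is outside the standalone's own `env` folder. Here is a minimal sketch for spotting such frames in a pasted traceback. `frames_outside_env` is an illustrative helper, not part of InvokeAI or the standalone.

```python
import re
from pathlib import PureWindowsPath

# Matches the 'File "...", line N' part of a Python traceback frame.
FRAME = re.compile(r'File "([^"]+)", line \d+')


def frames_outside_env(traceback_text: str, env_dir: str) -> list[str]:
    """Return traceback file paths that do not live under env_dir."""
    env = PureWindowsPath(env_dir)
    outside: list[str] = []
    for path in FRAME.findall(traceback_text):
        # PureWindowsPath compares case-insensitively and runs on any OS.
        if env not in PureWindowsPath(path).parents and path not in outside:
            outside.append(path)
    return outside


if __name__ == "__main__":
    tb = (
        r'File "D:\Deep Fusion\invokeai\invokeai3_standalone\env\lib\runpy.py", line 196, in _run_module_as_main' "\n"
        r'File "C:\Users\jaysh\AppData\Roaming\Python\Python310\site-packages\boto3\__init__.py", line 17, in <module>'
    )
    for p in frames_outside_env(tb, r"D:\Deep Fusion\invokeai\invokeai3_standalone\env"):
        print(p)
```

Python reads packages from the per-user site-packages folder unless the `PYTHONNOUSERSITE` environment variable is set, so setting it before launching may be worth a try. Whether that resolves this particular error is untested.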

encountering this error when using a custom model from civitai.com

[2023-08-11 03:51:29,258]::[InvokeAI]::ERROR --> Traceback (most recent call last):

File "C:\Users\crdbrdmsk\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\app\services\processor.py", line 86, in __process

outputs = invocation.invoke(

File "C:\Users\crdbrdmsk\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\torch\utils\_contextlib.py", line 115, in decorate_context

return func(*args, **kwargs)

File "C:\Users\crdbrdmsk\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\app\invocations\compel.py", line 81, in invoke

tokenizer_info = context.services.model_manager.get_model(

File "C:\Users\crdbrdmsk\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\app\services\model_manager_service.py", line 364, in get_model

model_info = self.mgr.get_model(

File "C:\Users\crdbrdmsk\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\backend\model_management\model_manager.py", line 484, in get_model

model_path = model_class.convert_if_required(

File "C:\Users\crdbrdmsk\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\backend\model_management\models\stable_diffusion.py", line 123, in convert_if_required

return _convert_ckpt_and_cache(

File "C:\Users\crdbrdmsk\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\backend\model_management\models\stable_diffusion.py", line 283, in _convert_ckpt_and_cache

convert_ckpt_to_diffusers(

File "C:\Users\crdbrdmsk\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\backend\model_management\convert_ckpt_to_diffusers.py", line 1740, in convert_ckpt_to_diffusers

pipe = download_from_original_stable_diffusion_ckpt(checkpoint_path, **kwargs)

File "C:\Users\crdbrdmsk\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\backend\model_management\convert_ckpt_to_diffusers.py", line 1257, in download_from_original_stable_diffusion_ckpt

logger.debug(f"original checkpoint precision == {checkpoint[precision_probing_key].dtype}")

KeyError: 'model.diffusion_model.input_blocks.0.0.bias'

[2023-08-11 03:51:29,263]::[InvokeAI]::ERROR --> Error while invoking:

'model.diffusion_model.input_blocks.0.0.bias'

any fix for this? first time using this ai
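The `KeyError` above means the converter looked for a tensor named `model.diffusion_model.input_blocks.0.0.bias` in the checkpoint and did not find it. One possible reason is that the file is not a full Stable Diffusion checkpoint (for example a LoRA or a different architecture), but that is a guess. If the download is a `.safetensors` file, the tensor names can be listed without loading the model, because the format starts with an 8-byte little-endian header length followed by a JSON header. A minimal sketch follows; the helper names are illustrative, and it does not work for `.ckpt` files.

```python
import json
import struct

# The tensor name taken from the KeyError in the log above.
PROBE_KEY = "model.diffusion_model.input_blocks.0.0.bias"


def safetensors_keys(path: str) -> list[str]:
    """List tensor names stored in a .safetensors file (header only)."""
    with open(path, "rb") as f:
        # First 8 bytes: unsigned little-endian length of the JSON header.
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len).decode("utf-8"))
    # "__metadata__" is an optional free-form entry, not a tensor.
    return [name for name in header if name != "__metadata__"]


def has_probe_key(path: str) -> bool:
    """True if the file contains the tensor the converter was probing for."""
    return PROBE_KEY in safetensors_keys(path)
```

If `has_probe_key` returns `False` for the downloaded file, the model's page on civitai.com should say what kind of model it actually is.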

I can't find the "force image" slider option in "image to image" in the NEW SDXL invokeai3_standalone. Is it still there, or am I just too dumb to find it? :(

I think it's called "Denoising Strength". And yeah, that's a really confusing name. :/

Confirmed, "Denoising Strength" is the new name for force image. On a happy note, I'm not dumb ;D

cant load up invokeai at all, tried the patch as well as renaming python folder

edit: this shows when launching the commandline.bat and helper.bat

the update.bat just closes instantly

Official standalone.

Loading...

Traceback (most recent call last):

File "Q:\Invoke\AI\invokeai3_standalone\env\lib\runpy.py", line 196, in _run_module_as_main

return _run_code(code, main_globals, None,

File "Q:\Invoke\AI\invokeai3_standalone\env\lib\runpy.py", line 86, in _run_code

exec(code, run_globals)

File "Q:\Invoke\AI\invokeai3_standalone\env\Scripts\invokeai-web.exe\__main__.py", line 4, in <module>

ModuleNotFoundError: No module named 'invokeai.app'

Press any key to continue

Thanks for reaching out! :)

Did you try to start the starter (invokeai_starter.exe) before that? The starter sets some required variables first.

yes, it was the first thing i tried, thats when i first saw the error, sorry for not clarifying.

Sunija, thanks a lot for the new upload!

Hi. Can I ask of you, if it's not too much trouble and due to how absolutely garbage my internet connection is, to upload either a far more compressed version of invokeai3_standalone.7z (13.5GB, v3.0.0), so its size approaches that of the previous 8.15GB zip, or a multi-part compressed set of both this 7z file and [NEW SDXL] invokeai3_standalone.7z (26 GB, v3.0.1), or torrents for them both, and/or a list of the component files (i.e. github repositories, huggingface/civitai models, etc.) to facilitate assembling a standalone?

There are many Youtube Tutorials for downloading and assemblage of all required files.

Any good recommendation?

https://invoke-ai.github.io/InvokeAI/installation/010_INSTALL_AUTOMATED/ or

Thanks for reaching out!

If you use the itch.io/app you might be able to download the file in parts. I think. :X

I *could* maybe make a "minimal version" that does not contain the control net models and the SDXL models. Then we can go down to 8 GB again. InvokeAI contains a downloader (it's in the commandline, but kinda usable) so you could download the models after that.

Oh, no, no, I was asking which models you built the standalone with (and any other libraries or similar requirements) since, let's just say, anything that requires a download of stuff to "install" itself is a big no-no in my setup, so I want to assemble a "ready to build completely offline" standalone.

I'm not sure if I understood you completely yet. ^^'

But on the github page of my standalone, there's an instruction on how to create the standalone yourself. It's a bit more "techy", because it requires you to do stuff in the command line. I can send you the compiled starter and batch files via Discord (Sunija#6598), I think I didn't add those to the repo yet. :X

You can find the github page here. Is that what you need?

Can we please get an update!

It's uploading and will be available in 2.5h here. :)

(If you start downloading before that, you'll get an incomplete file.)

Thank you for the response I thought I was getting ignored all this time.

Jesus, 26 GB? :P Any easy update files? I don't need the SDXL checkpoints, I have them :)

I cannot use img2img and unified canvas.

[2023-07-30 10:33:23,764]::[InvokeAI]::ERROR --> Traceback (most recent call last):

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\app\services\processor.py", line 70, in __process

outputs = invocation.invoke(

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\torch\utils\_contextlib.py", line 115, in decorate_context

return func(*args, **kwargs)

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\invokeai\app\invocations\latent.py", line 762, in invoke

image_tensor_dist = vae.encode(image_tensor).latent_dist

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\diffusers\utils\accelerate_utils.py", line 46, in wrapper

return method(self, *args, **kwargs)

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\diffusers\models\autoencoder_kl.py", line 236, in encode

h = self.encoder(x)

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\diffusers\models\vae.py", line 139, in forward

sample = down_block(sample)

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\diffusers\models\unet_2d_blocks.py", line 1150, in forward

hidden_states = resnet(hidden_states, temb=None)

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\diffusers\models\resnet.py", line 596, in forward

hidden_states = self.norm1(hidden_states)

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\torch\nn\modules\normalization.py", line 273, in forward

return F.group_norm(

File "C:\Users\Irfan\AppData\Roaming\itch\apps\invokeai\invokeai3_standalone\env\lib\site-packages\torch\nn\functional.py", line 2530, in group_norm

return torch.group_norm(input, num_groups, weight, bias, eps, torch.backends.cudnn.enabled)

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 256.00 MiB (GPU 0; 4.00 GiB total capacity; 2.95 GiB already allocated; 0 bytes free; 3.24 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

You are running out of memory. Maybe try to make smaller images first, then try to increase the size until it crashes, so you know the limit of your graphics card. :3
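To see how close the card is to its limit, the numbers can be pulled out of the error text. This is a minimal sketch that parses the message format shown above. `parse_oom` is an illustrative helper, not a PyTorch function, and it assumes the message wording stays as in this log.

```python
import re

# Unit names as they appear in the PyTorch message.
UNITS = {"bytes": 1, "KiB": 2**10, "MiB": 2**20, "GiB": 2**30}

PATTERNS = {
    "requested": r"Tried to allocate ([\d.]+) (\w+)",
    "total": r"([\d.]+) (\w+) total capacity",
    "allocated": r"([\d.]+) (\w+) already allocated",
    "free": r"([\d.]+) (\w+) free",
    "reserved": r"([\d.]+) (\w+) reserved",
}


def parse_oom(message: str) -> dict[str, int]:
    """Extract the memory figures (in bytes) from a CUDA out-of-memory message."""
    figures: dict[str, int] = {}
    for name, pattern in PATTERNS.items():
        match = re.search(pattern, message)
        if match:
            figures[name] = int(float(match.group(1)) * UNITS[match.group(2)])
    return figures


if __name__ == "__main__":
    msg = ("CUDA out of memory. Tried to allocate 256.00 MiB (GPU 0; 4.00 GiB total capacity; "
           "2.95 GiB already allocated; 0 bytes free; 3.24 GiB reserved in total by PyTorch)")
    for name, size in parse_oom(msg).items():
        print(f"{name}: {size / 2**20:.0f} MiB")
```

For the log above this shows a 4 GiB card with nothing free, so smaller images are the practical fix. The `max_split_size_mb` hint in the message refers to the `PYTORCH_CUDA_ALLOC_CONF` environment variable (for example `max_split_size_mb:128`); whether it helps here is not something this thread confirms.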

A matching Triton is not available, some optimizations will not be enabled.

Error caught was: No module named 'triton'

what is this?

from [NEW] invokeai3_standalone.7z

False error. :)

Triton is only available on Linux, so this error should not even appear on Windows.

I have the same error. Windows 10 System.

Will this be updated to the latest version 3.0.1?

this please. SDXL in regular UI is a must.

So I wonder how long this would take on a GTX 750, XD

I don't know about the GTX 750, but I tried a GTX 750 Ti 4GB (the 750 Ti also comes in 2 GB models, I believe). It's slow, but not dumb slow. The description said "6s per image on a RTX 3060"; my 750 Ti took 20s-ish.